Pilot and implementation programme

The HCPC has used the same model for the approval and monitoring of education programmes for over 10 years. The existing model is not risk-based and takes a one-size-fits-all approach. The strategic objectives and areas of focus for this programme were agreed by the Education and Training Committee in June 2020.

The pilot started in January and is designed to test whether the benefits we expect can be achieved within the new model. It will run until the end of August 2021. Between September and December 2021, we will work with education providers to prepare for full implementation of the new model in January 2022.

Strategic aim and objectives

To position the HCPC’s education function to be flexible, intelligent and data-led in its risk-based quality assurance of education providers.

To achieve this, the work programme will deliver improvements in the following areas:

- Achieving risk-based outcomes that are proportionate and consistent.

- Operating efficient and flexible quality assurance processes.

- Using a range of data and intelligence sources to inform decision making.

Key changes and benefits of the new QA model

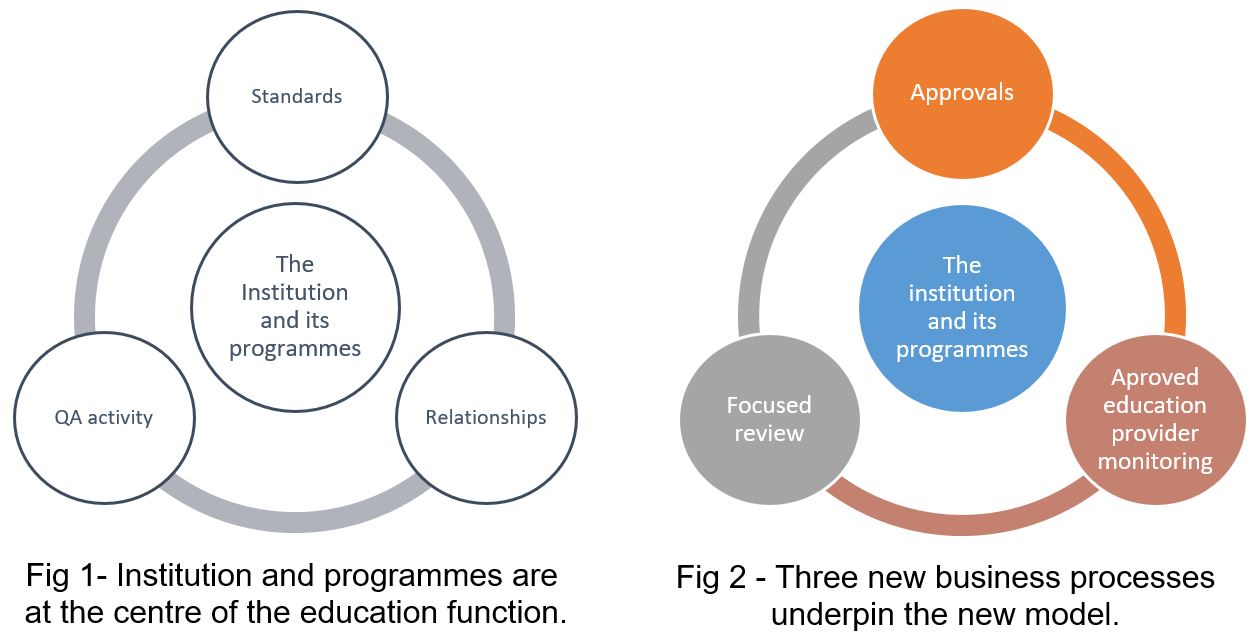

There are four key elements to the new QA model that distinguish it from existing processes.

These reflect stakeholders’ key priorities and support the strategic objectives of our work:

Institution-wide approaches to meeting standards that are common across programmes are embedded in the new model. Standards are structured to support this approach, alongside new quality assurance processes.

Expected benefits

- Improved understanding of how standards are met at different levels.

- Consistent outcomes achieved across different assessment activities at the same institution.

- Strategic relationships are created with senior stakeholders within institutions regarding relevant standards.

Relevant assessment measures used within pilot

- Education providers are satisfied with the consistency of outcomes reached through any QA process undertaken.

- Visitors focus more effectively on the appropriate areas of the standards at the appropriate time through each process, in comparison to the current model.

- Visitors are satisfied that they are positioned effectively to understand the wider organisational context of any decisions they reach.

- Outcomes data shows that issues were picked up and dealt with at the appropriate time and with the appropriate contacts, leading to smoother progression through the QA processes.

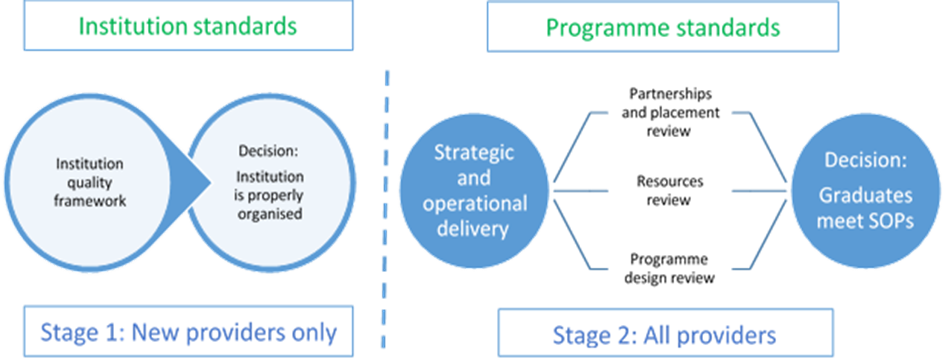

Institutions are now assessed in addition to their programmes to ensure providers are properly organised to deliver education.

The staged approach to assessment allows more focus on specific areas of the standards. The activity within each stage can also be designed more flexibly, driven by the issues identified. For example, most stakeholder engagement will be conducted virtually, with site visits held only where they support an effective exploration of issues by visitors.

Expected benefits

- Consistent outcomes achieved across different assessment activities at the same institution.

- Stakeholders are engaged flexibly, with a clear rationale provided.

- Site visits are only conducted where needed to assess standards.

- Final outcomes are achieved in less than 9 months (the current SLA).

Relevant assessment measures used within pilot

- Outcomes data demonstrates standards being applied consistently across an institution.

- Education providers are satisfied that the engagement undertaken is proportionate, meaningful and appropriate.

- Education providers perceive a reduction in the administrative burden for them to engage with us.

- Visitors are able to perform their role effectively through the structure of engagement used in any QA process undertaken.

- Qualitative data shows that assessment activities had a clear purpose and were applied in a proportionate way.

- The median time to complete the process is shorter than under the current model across a range of approval assessments.

- The cost of delivering assessment activities is comparable to the existing model.

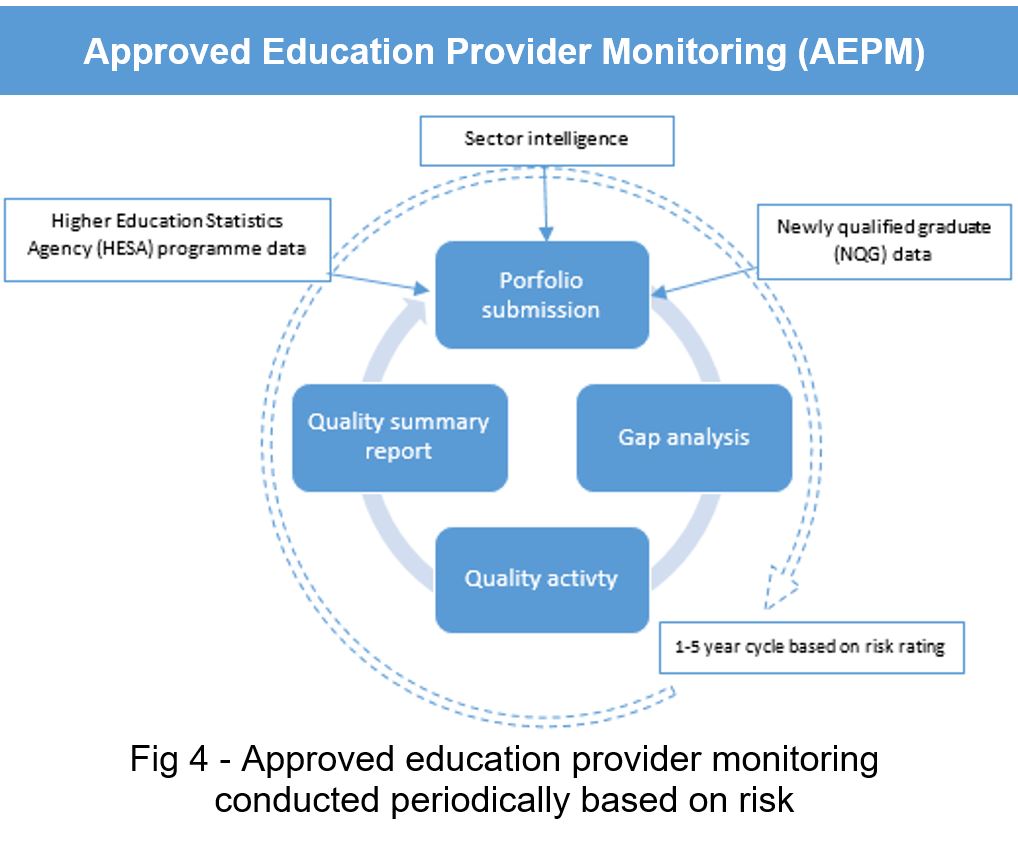

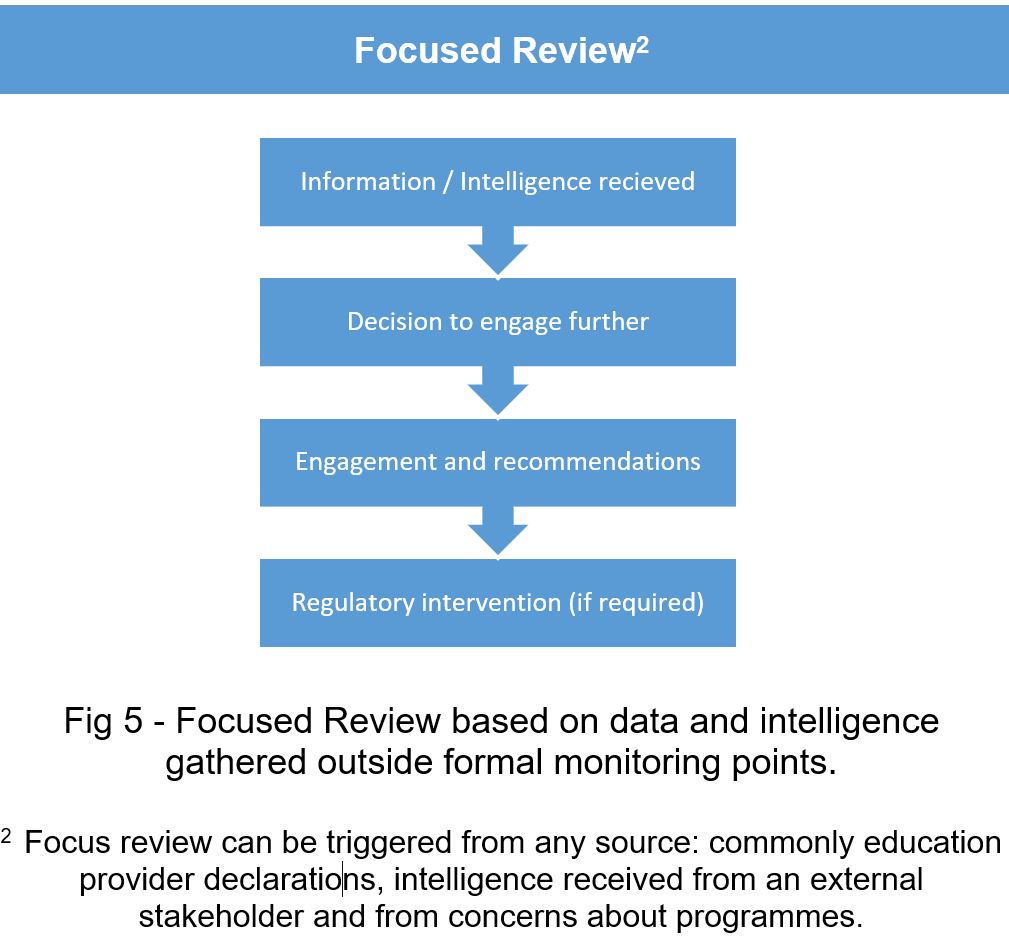

After approval, engagement will be driven by risks and issues, and our interventions will be tailored to support engagement around these and, where needed, formal assessment. This is most evident in our approach to the new monitoring processes, where action is based on the issues presented rather than being process-driven.

AEPM is designed to support meaningful engagement with education providers to understand risks and issues. Institutions will be risk-profiled in accordance with an established risk framework, which will determine how frequently they are monitored. The emphasis will be on understanding how quality is maintained and how programmes are performing. Focused Review provides flexibility to enable more timely and appropriate responses to particular issues outside formal AEPM monitoring.

Expected benefits

- Monitoring is focused on institutions where there are higher risks.

- Monitoring is tailored to investigate risks which are identified.

- Provider performance is documented and provides a clear rationale for risk assessment.

- Providers are incentivised to maintain and improve regulatory performance over time.

Relevant assessment measures used within pilot

- Visitors are supported and positioned to make risk-based decisions appropriately within the QA model.

- Risks are quantified effectively, with higher risk providers appropriately engaged in more intensive and timely regulatory interventions.

- Education providers understand the risk model and assessment applied through the QA processes and are satisfied they are objective and consistently applied.

- Providers can engage with and provide relevant information for the performance-related data points required through QA processes.

- The cost of delivering assessment activities is comparable to the existing model.

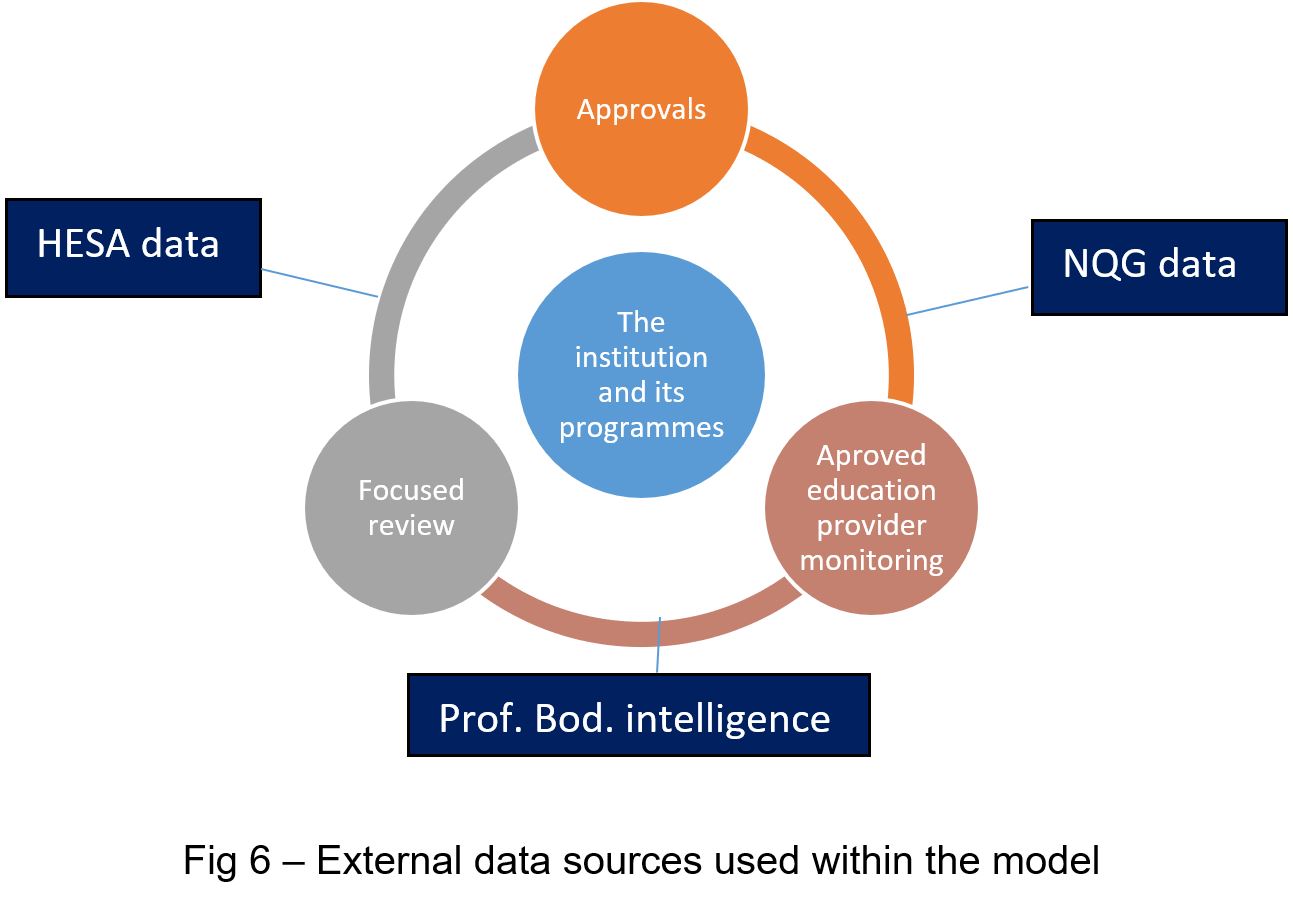

Data and intelligence are embedded into how we understand the risks and performance of education providers across all areas of the proposed QA model. The work programme limits delivery of this to three areas:

- Higher Education Statistics Agency (HESA) data

- Newly Qualified Graduate (NQG) data

- Professional body intelligence

Expected benefits

- More effective risk assessment and profiling of institutions and programmes

Relevant assessment measures used within pilot

- Sector based intelligence is used throughout each process where appropriate, which improves the quality of decision making.

- All provider types are able to engage with and provide relevant information for the performance-related data points required through QA processes.

- Education providers understand the risk model and assessment applied through the QA processes and perceive them to be objective and consistently applied.

- Visitors are supported and positioned to make risk-based decisions appropriately within the QA model.

- A risk model is delivered, which allows risks to be quantified effectively, with higher risk providers appropriately engaged in more intensive and timely regulatory interventions.

Overview of pilot evaluation methods

During the pilot, we will evaluate how the new model is working and the experience of education providers engaging with it. This will give us a detailed understanding of how far the expected benefits have been realised, and where the model, processes and guidance need further development.

The following feedback and data points will be gathered to inform this activity:

A desktop review of outcomes and engagement for each assessment will involve:

- A review of visitor findings

- A review of visitor and education provider engagement

- A review of executive coordination and process steps

All participants involved in any pilot activity will be asked to complete surveys during and at the conclusion of each assessment.

Different surveys will be produced for education providers and visitors.

Structured workshops will be held to gather in depth insight into participant experiences and perceptions of the assessment they have been involved in.

Separate workshops will be held with visitors and with education providers; these could also involve wider stakeholders where relevant (e.g. professional bodies, practice-based learning partners).

The significant differences between the existing and new QA models will limit the number of direct comparative data points available.

However, these will be used where possible, particularly in relation to:

- Duration of processes and timeliness of outcomes

- Quality of visitor findings

- Experience of participants

- Costs

Work programme milestones

This phase was carried out to prepare for pilot delivery. At the conclusion of this phase the following has been achieved:

Completed during this phase:

- Project documentation, project planning and governance arrangements

- Detailed business process mapping for each process element of the model

- Data and intelligence strategy defining how these are used throughout the model

- Risk-based decision-making framework for use in monitoring

- Pilot evaluation and continual development strategy and activity schedule

- Early adopter slots for fourteen education providers filled, pilot submissions scheduled

- Reorganisation of standards to support institution and programme level assessment

Commenced during this phase:

- Business-side support for the development of the new MS Dynamics/SharePoint implementation

- Guidance and information for early adopter education providers in pilot

- Discussions with HESA and Data and Intelligence regarding data sharing and analytics of programme and NQG data

Three cycles are delivered in this pilot phase, each used to test whether the various elements of the model are delivering the expected benefits.

Cycle 1 focuses mainly on the new approval process, while cycles 2 and 3 also introduce the new monitoring processes.

Each cycle will include an evaluation and improvement schedule to support further development of the new QA model, processes, guidance, user roles, systems and service elements (see the overview of pilot evaluation methods above for our evaluation techniques).

Progress on cycle outcomes will be reported to key stakeholders during this pilot phase. New approvals will be scheduled solely within the new model from May 2021 onwards.

This enables all approval activity in the next academic year (September 2021 onwards) to be conducted within the new model exclusively, and for full implementation of all elements of the model to be achieved by January 2022.

Following completion of the pilot cycles, the project board for the work will discuss readiness for a final report to the Education and Training Committee in September 2021.

That report will provide the Committee with the information it needs to decide whether to proceed with full implementation.

Assuming approval is given for implementation, work streams will commence to plan for full implementation of the new model in January 2022.

This will involve planning for a transition period, extensive communication with the sector, and reorganisation of internal department roles and structures.

Approval processes will already be scheduled at this point, so some assessment activity may already have begun within the new process.

At this point, the use of external data sources (HESA, NQG) also commences.

At this point, all education providers will be scheduled for approval and monitoring engagement within the new process.

All visitors will be prepared to undertake newly revised business processes and our internal and external systems and services will be operational.

Timescales for assessing all education providers through AEPM will be determined by the Education and Training Committee as part of its implementation decision in September 2021.

Frequently asked questions

Quality assurance model

The new model is more focused on the institution (rather than the programme), with the intention of reducing burden and increasing consistency. We will use data and information from you and from external sources to arrive at risk-based activities and outcomes. We are less focused on compliance and change, and more focused on how your institution is performing. We will also focus more on working with you to achieve outcomes through iterative, flexible processes.

We will continue to assess your provision against our standards, drawing on professional experts in our process. We will still need to approve new programmes before they run.

Internally, you should still consider and record changes that affect the quality of your provision, but you do not need to report routine changes to us. We are no longer running a ‘major change’ process. Instead, we ask you to reflect on changes in your monitoring portfolio when we request it. We will be assured that your agreed approaches mean you will manage changes well, and we will actively monitor external data and information sources to assure your ongoing performance.

We may ask you to supply a small amount of data, but you will no longer need to provide an ‘annual monitoring’ submission. Instead, we will ask you to produce a portfolio focused on the performance of your institution. This will be required at intervals defined by a performance and risk scoring exercise.

Education providers will define at least one of each of the following contacts:

- Quality assurance – individual(s) who have oversight of HCPC-approved provision from a quality and enhancement perspective. We will interact with these contacts on matters of quality on a granular, regular and ongoing basis.

- Senior – individual(s) who have strategic oversight of HCPC-approved provision. We will work with these contacts on strategic matters, and keep them informed of significant matters of quality related to the institution.

- Programme – individual(s) who have professional oversight at programme level. We will work with these contacts on professional matters, and keep them informed of matters of quality at programme or profession level.

We take data and information from providers and external sources and use it to form a performance score for each provider. This score is calculated using a methodology that we will publish, so that our decisions are transparent. The score will be used as part of the quality and performance picture, alongside other evidence and information.